Facebook apologizes after Safety Check bug

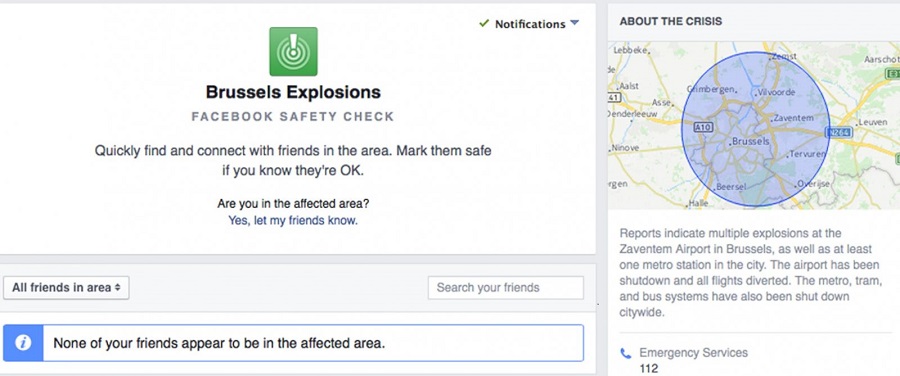

Facebook’s Safety Check feature caused the company some embarrassment on Sunday, following the devastating bomb attack in a Lahore park that killed over 70 people. The feature is designed to let people mark themselves as ‘safe’ following a tragedy such as a natural disaster or a terrorist attack. Since Facebook is one of the primary platforms on which millions of people communicate, this would seem a sensible feature to deploy. The need for it to be well tested and bug-free was highlighted when the system behaved unpredictably on Sunday.

People who were in no way affected by the explosion, and in many cases were not even in the country at the time, found Facebook asking them ‘Are you affected by the explosion?’ Many were left confused by the message, and some were driven to panic at being asked whether they were safe after an explosion without being told where it had happened. Naturally, many recipients assumed there had been a disaster in their vicinity that they weren’t yet aware of.

Facebook later wrote a post apologizing for the slip-up, and blamed a ‘bug’ in the system for what happened. Most people, however, seemed forgiving of the lapse, given that the intent behind the system is safety and community, while a few cautioned against sending such panic-inducing messages without proper checks in place.

Facebook now uses this feature far more widely than before, after it was criticized for being selective about the disasters for which it was activated. (It had deployed the feature for the Paris attacks, but had ignored similar attacks in Beirut and elsewhere.)

AI systems seem prone to bugs and need exhaustive testing, especially given that they reach such a vast audience and can quickly be misinterpreted. The recent incident in which Microsoft took down its AI Twitter chatbot Tay, after malicious users quickly trained it to post hateful and racist messages, is a prime example of such an under-tested product. With Tay, Microsoft had to pull the plug in under 24 hours, given what it had turned into.

Safety Check is one of several features that Facebook is trying out to make the platform more engaging and valuable to its users. “We worked quickly to resolve the issue and we apologize to anyone who mistakenly received the notification,” said Facebook.

